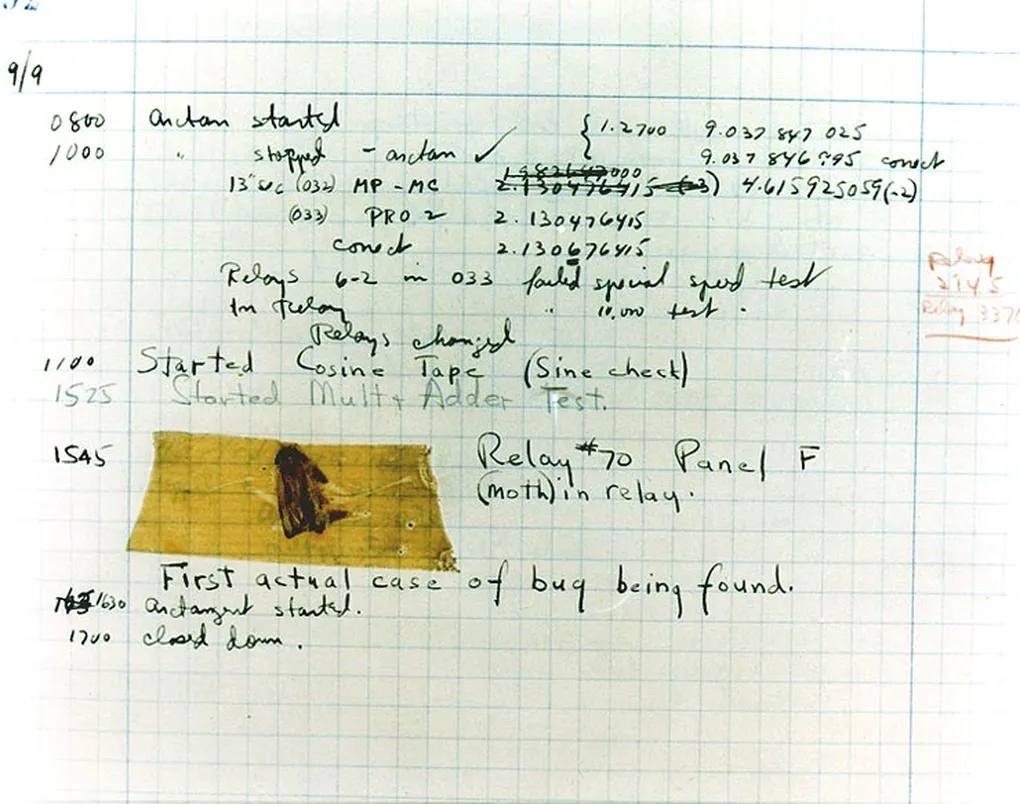

The term “bug” is deeply rooted in the history of computing, and its origin is often traced to a specific event in 1947. At the time, the Harvard Mark II, one of the earliest computers, was in operation at Harvard University. This massive electromechanical machine relied on relays and switches to perform its calculations. On September 9, 1947, its operators ran into an unexpected problem: the machine was malfunctioning, and they couldn’t figure out why.

Upon inspection, they found the culprit: a moth had become trapped in one of the machine’s relays and jammed it. The operators removed the moth, taped it into their logbook, and wrote beside it, “First actual case of bug being found.” The joke only worked because engineers were already calling mechanical glitches “bugs”; Thomas Edison used the word this way in the 1870s. The incident did not coin the term, but it gave it a memorable and lasting association with problems in computing.

Since then, “bug” has become a staple in the lexicon of computer science, referring to any flaw, error, or glitch in software or hardware. While modern computers are far more advanced than the Mark II, and real insects are no longer the primary source of technical problems, the term endures, reminding us of the fascinating and occasionally quirky history of technology.